RAG Stores

The RAG (Retrieval-Augmented Generation) Stores page allows you to create, manage, and populate document collections that your AI agents can use for grounding. This enables agents to answer questions and generate responses based on the specific content you provide, rather than relying solely on their general knowledge.

Each store corresponds to a RAG Corpus in Google's Vertex AI. When you upload files, they are added to this corpus and become searchable by the agent. You need a Google Cloud (GCP) account with a GCS bucket and Vertex AI enabled.

Adding a New RAG Store

To create a new store, expand the "New Store" section and fill out the form.

- Store Name: A unique, descriptive name for your document collection (e.g., "Product Manuals", "Internal Knowledge Base"). This cannot be changed after creation.

- Status: Toggles the store on or off for all associated agents.

- Agent(s): Select one or more agents that will have access to this store. An agent can only be assigned to one store at a time.

- Description: A brief summary of the content and purpose of the store.

- Store Bucket Name: The name of the GCS bucket for this store. The name can include dashes.

- Store Connector: The connector used to communicate with non-public buckets.

- Embedding Model: The AI model used to convert your documents into vector embeddings for searching. This is a critical setting and cannot be changed after the store is created.

- Top K: The maximum number of relevant document chunks to retrieve and provide to the LLM as context.

- Distance Threshold: A value between 0.0 and 1.0 that determines how similar a document chunk must be to the user's query to be considered a match. A lower value means a stricter match.
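The interaction between Top K and Distance Threshold can be sketched in a few lines of plain Python. This is an illustration only, not the retrieval code Vertex AI runs: it assumes cosine distance as the metric and non-zero vectors, and the `retrieve` function and the sample chunks are hypothetical.

```python
import math
from typing import List, Sequence, Tuple

Chunk = Tuple[str, Sequence[float]]  # (chunk text, embedding vector)


def cosine_distance(a: Sequence[float], b: Sequence[float]) -> float:
    """Return 1 - cosine similarity; 0.0 means the vectors point the same way."""
    dot = sum(x * y for x, y in zip(a, b))
    norm_a = math.sqrt(sum(x * x for x in a))
    norm_b = math.sqrt(sum(y * y for y in b))
    return 1.0 - dot / (norm_a * norm_b)


def retrieve(
    query: Sequence[float],
    chunks: List[Chunk],
    top_k: int,
    distance_threshold: float,
) -> List[Tuple[str, float]]:
    """Keep chunks within the threshold, then return the top_k closest."""
    scored = [(text, cosine_distance(query, vec)) for text, vec in chunks]
    close_enough = [pair for pair in scored if pair[1] <= distance_threshold]
    close_enough.sort(key=lambda pair: pair[1])  # smallest distance first
    return close_enough[:top_k]


# Hypothetical two-dimensional embeddings, for illustration only.
chunks = [
    ("reset instructions", [1.0, 0.0]),
    ("warranty terms", [0.9, 0.1]),
    ("office hours", [0.0, 1.0]),
]
query = [1.0, 0.0]

loose = retrieve(query, chunks, top_k=5, distance_threshold=0.5)
strict = retrieve(query, chunks, top_k=5, distance_threshold=0.001)
```

With the looser threshold, two chunks pass the filter; with the stricter one, only the exact match does. Top K then caps how many of the surviving chunks are handed to the LLM.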

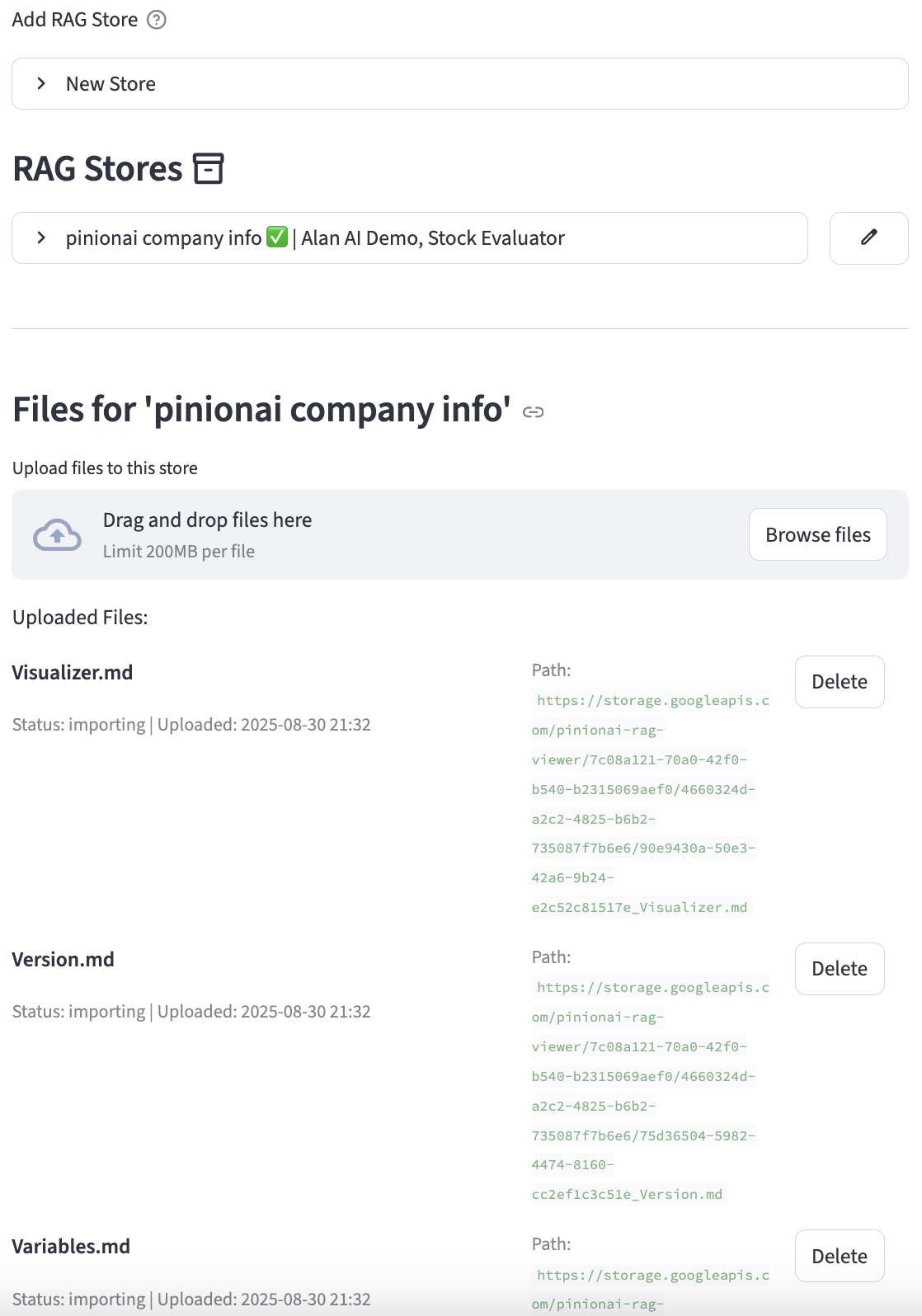

Managing Stores and Files

Once created, each store will appear in a list. You can expand any store to manage its settings and files.

- Shortcut Edit Button (:material/edit:): Located on the right of the store's header, this button immediately opens the edit form for that store.

- Edit Button (inside expander): An alternative edit button located inside the expanded view.

- Delete Button (:material/delete:): This is a destructive action. It will:

  - Attempt to delete the corresponding RAG Corpus from Vertex AI.

  - Delete all associated files from the GCS bucket.

  - Delete the store and its file records from the PinionAI database.
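The order of the delete steps can be sketched as a small best-effort routine. This is not PinionAI's implementation: the three callables are hypothetical stand-ins for the real operations, and continuing after a failed corpus deletion is an assumption read from the word "Attempt" above.

```python
from typing import Callable, List


def delete_store(
    delete_corpus: Callable[[], None],
    delete_bucket_files: Callable[[], None],
    delete_db_records: Callable[[], None],
) -> List[str]:
    """Run the three delete steps in order and return a log of what happened."""
    log: List[str] = []
    try:
        delete_corpus()
        log.append("corpus deleted")
    except Exception as exc:
        # Assumption: a failed corpus deletion is recorded, not fatal.
        log.append(f"corpus delete failed: {exc}")
    delete_bucket_files()
    log.append("bucket files deleted")
    delete_db_records()
    log.append("database records deleted")
    return log


def failing_corpus_delete() -> None:
    """Hypothetical stand-in that simulates a Vertex AI error."""
    raise RuntimeError("corpus not found")


result = delete_store(failing_corpus_delete, lambda: None, lambda: None)
```

If the corpus step fails in a flow like this, the corpus may still exist in Vertex AI after the store disappears from the list, so it is worth checking the Google Cloud Console after deleting a store.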

Managing Files

Inside each store's expander, you will find the file management section.

- File Uploader: Drag and drop files or use the browser to select one or more files to add to the store.

- Upload Process: When you upload a file, the system performs several steps:

  - The file is uploaded to a secure Google Cloud Storage (GCS) bucket.

  - A record of the file is created in the PinionAI database with a status of importing.

  - A job is initiated to import the file from GCS into the Vertex AI RAG Corpus. This process can take a few minutes.

  - Once the import is complete, the file's content will be available for the agent to search.

- File List: Displays all files that have been uploaded to the store, along with their status and upload date.

- Delete File: Deleting a file will remove it from GCS and the database. Important: This action does not automatically remove the file from the Vertex AI RAG Corpus. You must do this manually through the Google Cloud Console to ensure it is no longer used for retrieval.
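The file lifecycle described above, including the delete caveat, can be modelled with a small in-memory simulation. Everything here is a hypothetical stand-in: `StoreSim` replaces the real GCS bucket, PinionAI database, and Vertex AI corpus, and the `imported` status name is an assumption (only `importing` appears in this guide).

```python
from dataclasses import dataclass, field
from typing import Dict, Set


@dataclass
class StoreSim:
    """In-memory stand-in for the three places a file lives."""
    bucket: Set[str] = field(default_factory=set)           # GCS objects
    records: Dict[str, str] = field(default_factory=dict)   # file name -> status
    corpus: Set[str] = field(default_factory=set)           # RAG Corpus files

    def upload(self, name: str) -> None:
        """Steps 1 and 2: store the file and create its database record."""
        self.bucket.add(name)
        self.records[name] = "importing"

    def finish_import(self, name: str) -> None:
        """Steps 3 and 4: the import job completes and the file is searchable."""
        self.corpus.add(name)
        self.records[name] = "imported"  # hypothetical status name

    def delete_file(self, name: str) -> None:
        """Remove the file from GCS and the database; the corpus is untouched."""
        self.bucket.discard(name)
        self.records.pop(name, None)

    def orphaned_in_corpus(self) -> Set[str]:
        """Files still retrievable even though their record was deleted."""
        return self.corpus - set(self.records)


store = StoreSim()
store.upload("manual.pdf")
status_after_upload = store.records["manual.pdf"]
store.finish_import("manual.pdf")
store.delete_file("manual.pdf")
leftover = store.orphaned_in_corpus()
```

After `delete_file`, the file is gone from the bucket and the database, but `orphaned_in_corpus()` still reports it. That leftover entry is what has to be removed manually in the Google Cloud Console.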

Quick Help - Embedding Models

Several embedding models are available on Vertex AI, including those associated with the Gemini family of models.

Key text embedding models include:

- gemini-embedding-001:

  - This model is designed for English, multilingual, and code tasks.

  - It combines the capabilities of text-embedding-005 (English and code) and text-multilingual-embedding-002 (multilingual).

  - It outputs vectors with up to 3072 dimensions, which can be adjusted using the output_dimensionality parameter.

  - It supports one instance per request and a maximum input length of 2048 tokens.

- text-embedding-004:

  - This model is suitable for English text embedding and is often used with Vertex AI Vector Search.

  - It outputs 768-dimensional vectors.

  - It has an input token limit of 2048 tokens, with truncation of longer input.

- text-multilingual-embedding-002:

  - This model is recommended for multilingual tasks, including use with a RAG corpus.

  - It outputs 768-dimensional vectors.

  - It has an input token limit of 2048 tokens, with truncation of longer input.

- text-embedding-005:

  - This model specializes in English and code tasks and may be recommended as a default embedding model for a RAG corpus.

  - It outputs up to 768-dimensional vectors.

- gemini-embedding-exp-03-07:

  - This experimental Gemini embedding model is available to developers through the API.

Multimodal embeddings

The multimodalembedding@001 model embeds different data types (images, text, video) into a shared vector space. It generates 1408-dimensional vectors and supports tasks like image classification and video content moderation. Note that 1408 is the vector dimension, not a token limit: the model accepts only short text inputs (up to 32 tokens), so it is not suited to embedding long document chunks.

Open-source embedding models

Vertex AI Model Garden also supports open-source embedding models such as e5-base-v2, e5-large-v2, e5-small-v2, multilingual-e5-large, and multilingual-e5-small.

Important considerations

- When selecting an embedding model, consider the specific task (e.g., English text, multilingual text, multimodal data), the desired output dimensionality, and the input token limits.

- Fine-tuning embedding models with your specific data might be necessary for optimal results in certain scenarios, especially with Retrieval-Augmented Generation (RAG).

- Refer to the Google Cloud documentation https://cloud.google.com/vertex-ai/generative-ai/docs/embeddings/get-text-embeddings for the most current information on supported models, their capabilities, and usage details.
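To make the selection criteria concrete, the text-model details listed in this section can be written down as a small lookup table. This is a convenience sketch, not an official catalogue: the values are copied from the descriptions above, the `candidates` helper and the task labels are hypothetical, and a token limit of `None` means this guide does not state one.

```python
from typing import Dict, FrozenSet, List, NamedTuple, Optional


class ModelInfo(NamedTuple):
    max_dimensions: int              # largest output vector size
    max_input_tokens: Optional[int]  # None: not stated in this guide
    tasks: FrozenSet[str]            # hypothetical task labels


# Values taken from the model descriptions in this section.
MODELS: Dict[str, ModelInfo] = {
    "gemini-embedding-001": ModelInfo(
        3072, 2048, frozenset({"english", "multilingual", "code"})
    ),
    "text-embedding-004": ModelInfo(768, 2048, frozenset({"english"})),
    "text-multilingual-embedding-002": ModelInfo(
        768, 2048, frozenset({"multilingual"})
    ),
    "text-embedding-005": ModelInfo(768, None, frozenset({"english", "code"})),
}


def candidates(task: str, min_dimensions: int = 0) -> List[str]:
    """Return model names that cover the task and offer enough dimensions."""
    return sorted(
        name
        for name, info in MODELS.items()
        if task in info.tasks and info.max_dimensions >= min_dimensions
    )


multilingual_models = candidates("multilingual")
code_models = candidates("code")
large_english_models = candidates("english", min_dimensions=1024)
```

Because the embedding model cannot be changed after a store is created, it helps to narrow the options this way before filling out the form, and to confirm the current figures in the Google Cloud documentation.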