Files

The Files page lets you define operations for reading from and writing to files, so your AI agent can interact with storage systems such as Google Cloud Storage (GCS), AWS S3, and the local filesystem. Use it to persist data from a conversation, load configuration or data for a task, or exchange information with other systems through files.

Prerequisites

Before you can manage File operations, ensure the following conditions are met:

- You must be logged into the application.

- Your user role must have the necessary permissions (`owner`, `admin`, `editor`, or `files`).

- You must have an Account selected from the sidebar.

- You must have an Agent selected from the sidebar. File operations are managed on a per-agent basis.

Page Overview

The Files page is divided into three main sections:

- Add File Operation: An expandable form for creating new file read/write configurations.

- Files List: A list of existing file operations associated with the selected agent.

- Assign File Operation: A tool to associate an existing file operation (from your account) with the current agent.

Creating a New File Operation

To create a new file operation, click on the "New File Operation" expander to reveal the creation form.

Helper Buttons

- Add Variable: Opens a dialog to create a new variable that can be used within your file operation configurations (e.g., for dynamic paths or as source/destination variables).

- Lookup Names: Opens a dialog to look up the exact names of Connectors and Variables available for the selected agent.

Configuration Fields

Here is a breakdown of the fields in the "New File Operation" form:

- Name: A unique, descriptive name for the file operation (e.g., `save_invoice_to_gcs`, `read_user_preferences`).

- Description: A detailed description of what this operation does.

- Status: A toggle to enable (`True`) or disable (`False`) this operation.

- Agent(s): A multi-select dropdown to associate this operation with one or more agents.

- Direction: Specifies whether the operation is for reading, writing, or both.

  - `read`: Reads data from the specified path into a variable.

  - `write`: Writes data from a variable to the specified path.

  - `both`: Can perform both, depending on which variables are set during execution.

- Provider: The storage backend to interact with.

  - `gcs`: Google Cloud Storage.

  - `s3`: Amazon S3.

  - `local`: The local filesystem of the server running the agent.

- Bucket Name: (For `gcs` and `s3`) The name of the storage bucket.

- Path Template: A flexible way to define the file path. You can embed variables to create dynamic paths, for example: `invoices/{var["customer_id"]}/{var["invoice_number"]}.pdf`.

- File Format: The format to use when writing data from a variable, and the format to expect when reading. This ensures data is correctly serialized and deserialized.

  - `text`: For plain text content. This is the default.

  - `json`: For structured data like dictionaries and lists. When writing, it converts a Python dict/list to a JSON string. When reading, it parses a JSON file into a Python dict/list.

  - `csv`: For tabular data. When writing, it converts a list of dictionaries into CSV format. When reading, it parses a CSV file into a list of dictionaries.

  - `xml`: For XML data. When writing, it converts a dictionary to an XML string. When reading, it parses an XML file into a dictionary.

  - `binary`: For raw byte content. Use this for non-text files like images, PDFs, or audio. The source variable must contain bytes when writing.

- Connector: An optional Connector to use for authenticating with the cloud storage provider (e.g., a GCS Service Account or AWS IAM connector).

- Source Variable: (For `write` operations) The name of the session variable that holds the content you want to save to the file.

- Destination Variable: (For `read` operations) The name of the session variable where the content of the read file will be stored.

Once all fields are configured, click Create File Operation.
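To make the Path Template field more concrete, the following is a minimal sketch of how `{var["name"]}` placeholders could be resolved against session variables. The helper `render_path_template` and the sample values are hypothetical and only illustrate the substitution; the application's actual resolution logic may differ.

```python
import re

# Matches placeholders of the form {var["name"]}, as shown in the Path Template field.
_PLACEHOLDER = re.compile(r'\{var\["([^"\]]+)"\]\}')


def render_path_template(template: str, variables: dict) -> str:
    """Hypothetical helper: replace each {var["name"]} with the matching session value."""

    def substitute(match: re.Match) -> str:
        name = match.group(1)
        if name not in variables:
            # Fail loudly rather than writing to a half-resolved path.
            raise KeyError(f"Path template references an undefined variable: {name}")
        return str(variables[name])

    return _PLACEHOLDER.sub(substitute, template)


# Sample session variables (illustrative values only).
session_vars = {"customer_id": "C-042", "invoice_number": "INV-2024-0007"}

path = render_path_template(
    'invoices/{var["customer_id"]}/{var["invoice_number"]}.pdf',
    session_vars,
)
print(path)  # invoices/C-042/INV-2024-0007.pdf
```

In this sketch an undefined variable raises an error instead of producing a partial path, which is one reasonable design choice; how the platform treats missing variables is not stated here.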

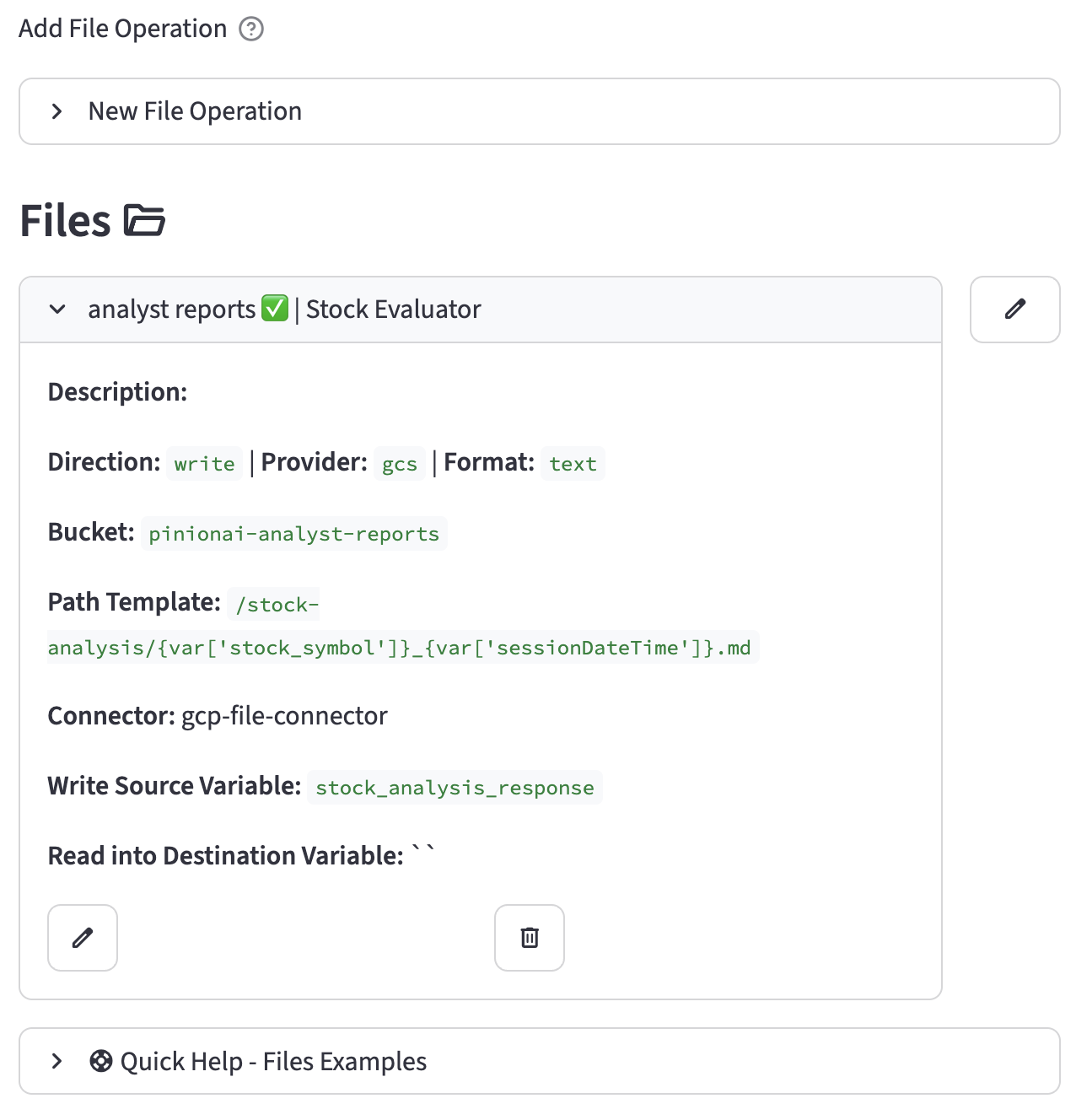

Managing Existing File Operations

Below the "Add File Operation" section, all file operations associated with the currently selected agent are listed.

- Each operation is displayed in its own expander, showing its name, status, and associated agents.

- Expanding an operation reveals all of its configured details, including provider, direction, format, and path.

- On the right of each operation's title bar, you will find two icons:

  - Edit: Click this to open the edit form for that operation.

  - Delete: Click this to permanently delete the operation. You will be asked to confirm.

Editing a File Operation

Clicking the Edit icon opens a form identical to the "Add File Operation" form, but pre-populated with the selected operation's data. You can modify any field and then click Save Changes to update the operation or Cancel to discard your changes.

Assigning a File Operation to an Agent

At the bottom of the page, you may see a form titled "Assign existing File operation to '[agent_name]':".

- This tool is for associating a file operation that already exists in your account but is not yet assigned to the currently selected agent.

- The dropdown lists all such available operations.

- Select an operation from the list and click Assign File Operation to link it to the current agent.

Configuration Examples

Example 1: Writing a JSON API Response to S3

This operation takes a dictionary stored in a variable (e.g., from an API call) and saves it as a structured JSON file in an S3 bucket.

- Name: `save_order_details_to_s3`

- Direction: `write`

- Provider: `s3`

- Bucket Name: `my-company-orders`

- Path Template: `processed/{var["order_id"]}.json`

- File Format: `json`

- Source Variable: `api_order_response`
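As a rough illustration of what this configuration implies, the sketch below serializes a sample dictionary to JSON and builds the object key that the path template would resolve to. The session contents are made up, and the upload itself is omitted; this is an approximation of the described behavior, not the platform's implementation.

```python
import json

# Illustrative session state: the source variable and the variable used in the path.
session = {
    "order_id": "A-1001",
    "api_order_response": {
        "order_id": "A-1001",
        "items": [{"sku": "X1", "qty": 2}],
        "total": 59.9,
    },
}

# With File Format set to json, the dict is converted to a JSON string before writing.
body = json.dumps(session["api_order_response"])

# The path template processed/{var["order_id"]}.json with order_id filled in.
key = f"processed/{session['order_id']}.json"

print(key)  # processed/A-1001.json

# Reading the same file back with the json format should yield an equal dict.
print(json.loads(body) == session["api_order_response"])  # True
```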

Example 2: Reading a CSV Product List from a Local Path

This operation reads a CSV file from the local server and loads its content into a variable as a list of dictionaries, which can then be used by the agent.

- Name: `load_product_catalog`

- Direction: `read`

- Provider: `local`

- Path Template: `/app/data/product_catalog.csv`

- File Format: `csv`

- Destination Variable: `product_list`
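The shape of the resulting `product_list` can be approximated with Python's standard `csv` module. The column names and rows below are invented for illustration, and the sketch parses an in-memory string rather than the real file. It assumes the platform's CSV parsing behaves like `csv.DictReader`, which is not confirmed here.

```python
import csv
import io

# Stand-in for the contents of /app/data/product_catalog.csv (hypothetical columns).
sample_csv = "sku,name,price\nX1,Widget,9.99\nX2,Gadget,24.50\n"

# Each row becomes a dictionary keyed by the header row.
product_list = list(csv.DictReader(io.StringIO(sample_csv)))

print(len(product_list))  # 2
print(product_list[0]["name"])  # Widget

# csv.DictReader returns every value as a string, so numeric columns need conversion.
prices = [float(row["price"]) for row in product_list]
print(prices)  # [9.99, 24.5]
```

If the agent needs numeric values, converting them after the read, as in the last step, avoids string comparisons on prices or quantities.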

Example 3: Writing a Generated Image to GCS

This operation takes binary image data stored in a variable and uploads it to a Google Cloud Storage bucket.

- Name: `upload_generated_chart`

- Direction: `write`

- Provider: `gcs`

- Bucket Name: `my-analytics-charts`

- Path Template: `daily_reports/{var["report_date"]}/{var["chart_name"]}.png`

- File Format: `binary`

- Source Variable: `generated_chart_bytes`
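Because the `binary` format requires the source variable to contain bytes, it can help to validate the value before the write runs. The sketch below shows one way to do that; `ensure_bytes` is a hypothetical helper, and the payload is a placeholder that starts with the standard PNG signature rather than a real image.

```python
# The 8-byte signature that begins every PNG file.
PNG_SIGNATURE = b"\x89PNG\r\n\x1a\n"


def ensure_bytes(value) -> bytes:
    """Hypothetical check: accept bytes-like content, reject anything else."""
    if not isinstance(value, (bytes, bytearray)):
        raise TypeError(
            f"binary format expects bytes, got {type(value).__name__}"
        )
    return bytes(value)


# Placeholder content standing in for real chart data.
generated_chart_bytes = PNG_SIGNATURE + b"placeholder-image-data"

payload = ensure_bytes(generated_chart_bytes)
print(payload.startswith(PNG_SIGNATURE))  # True

# A string (for example, a base64-encoded image) would be rejected by this check.
try:
    ensure_bytes("not-bytes")
except TypeError as err:
    print(err)  # binary format expects bytes, got str
```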